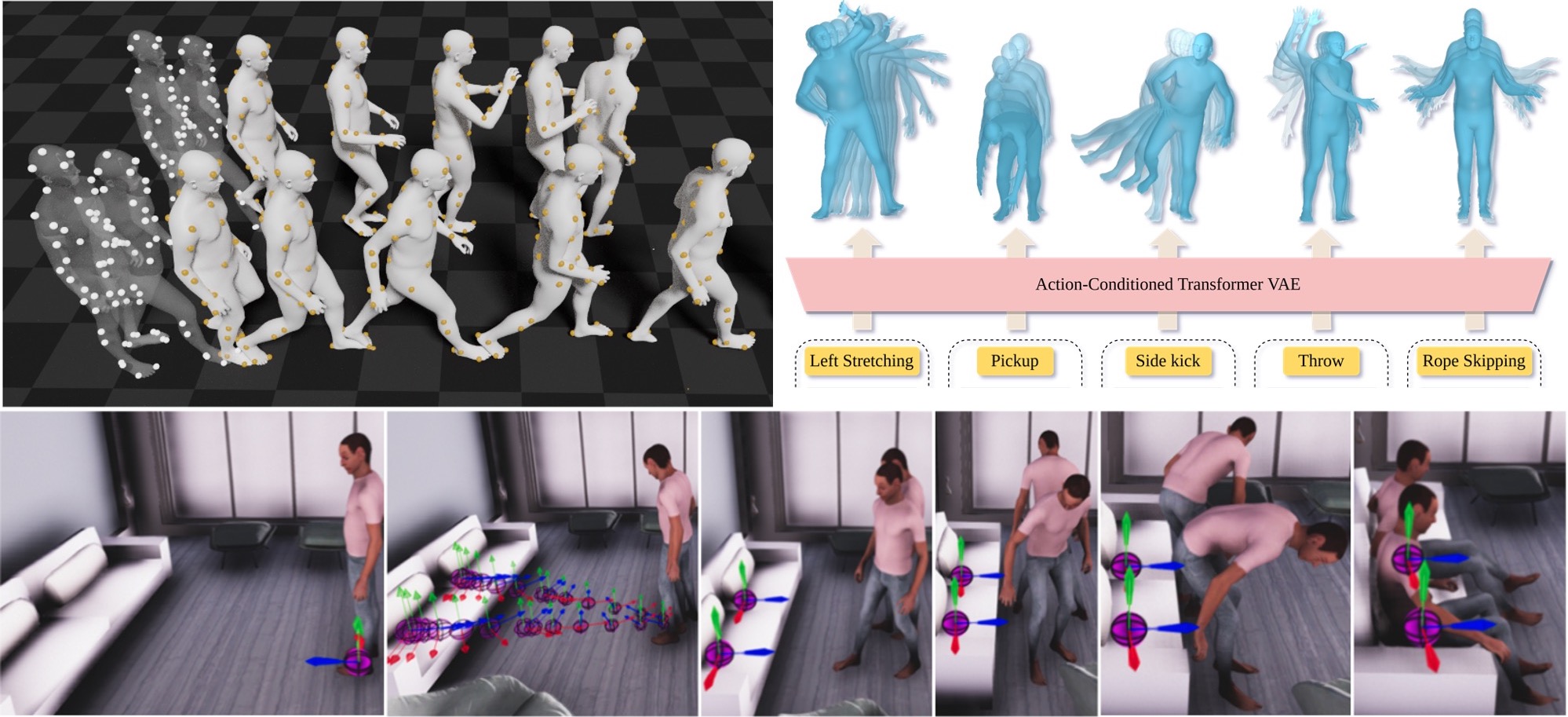

Clockwise from upper left: MOJO [ ] predicts human movement given past movement. ACTOR [ ] generates diverse human movements conditioned on an action label. SAMP [ ] produces human motions to satisfy goals like "sit on the sofa".

A key goal of Perceiving Systems is to model human behavior. One way of testing our models is by generating movement.

One class of motion generation methods takes a short segment of human motion and predicts future motions; this is a classical time-series prediction problem but with unique physical constraints. We observed that many prediction methods suffer from "regression to the mean" and that state-of-the-art performance can be achieved by a simple baseline that does not model motion at all [ ]. We have explored RNN-based methods [ ] and a simple encoder/decoder architecture [ ] to take past poses and predict future ones.

While prior work focuses on predicting joints, we note that these can be thought of as a very sparse point cloud; i.e., motion prediction methods are point-cloud predictors. Unfortunately, over time, these points tend to deviate from valid body shapes. Instead of joints, with MOJO [ ], we predict virtual markers on the body surface. This allows us to fit SMPL to them at each time step, effectively projecting the solution back onto the space of valid bodies.

In many cases, we want to generate motion corresponding to specific actions. ACTOR [ ] does this using an action-conditioned transformer VAE. By sampling from the VAE latent space, and querying a certain duration through a series of positional encodings, we synthesize diverse, variable-length motion sequences conditioned on an action.

Most synthesis methods, like those above, know nothing about the 3D scene. SAMP [ ], in contrast, generates motions of an avatar through a novel scene to achieve a goal like "sit on the chair". SAMP uses a GoalNet to generate object affordances, such as where to sit on a novel sofa. A MotionNet sequentially predicts body poses based on the past motion and the goal, while an A* algorithm plans collision-free paths through the scene to the goal.

While ACTOR and SAMP take steps towards generating movement from high-level goals, many motions are governed by physics. Hence, we also explore physics-based controllers of human movement [ ]. We envision a future that combines the best of both approaches with learned models of behavior combined with physical constraints coming from environmental interaction.