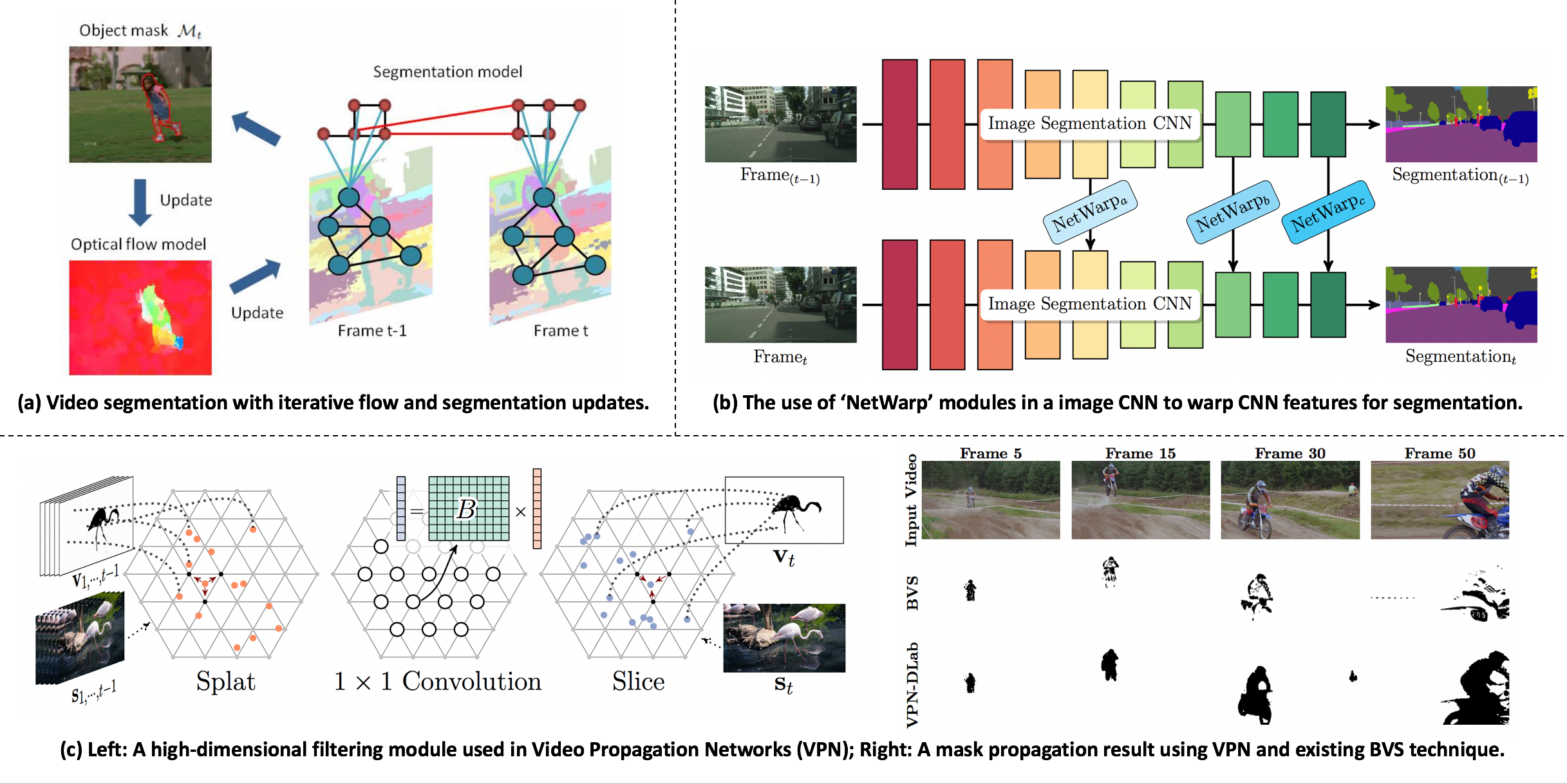

Illustration of different video segmentation and propagation techniques: (a) Object Flow [ ]. (b) NetWarp [ ]. (c) Video Propagation Networks [ ].

Videos provide much richer scene information than still images. Despite this, most existing video segmentation techniques still process each frame independently. Video segmentation is challenging because of fast-moving objects, deforming shapes, and cluttered backgrounds. At Perceiving Systems, we study how to exploit the motion information and pixel correlations present across video frames to overcome some of these challenges and obtain better video segmentations.

In [ ], we propose an efficient algorithm that addresses video segmentation and optical flow estimation simultaneously. We formulate a principled, multiscale, spatio-temporal objective function that uses optical flow to propagate information between frames. For optical flow estimation, we compute the flow independently within each segmented region and then recompose the results. We call this process "object flow" and, using an iterative scheme, demonstrate the effectiveness of jointly optimizing optical flow and video segmentation.
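One ingredient above is using optical flow to carry segmentation information from one frame to the next. The sketch below is not the object flow objective from [ ]; it only illustrates that propagation step, under two assumptions: the flow is a dense backward flow (each pixel in frame t points to its match in frame t-1), and labels are copied with nearest-neighbour lookup. The function name `propagate_labels` is hypothetical.

```python
import numpy as np


def propagate_labels(prev_labels, flow):
    """Warp a per-pixel label map from frame t-1 to frame t (illustrative sketch).

    prev_labels: (H, W) integer labels of frame t-1.
    flow: (H, W, 2) backward flow (assumed convention); flow[y, x] = (dx, dy)
          points from pixel (x, y) in frame t to (x + dx, y + dy) in frame t-1.
    Uses nearest-neighbour lookup; coordinates are clamped at the image border.
    """
    h, w = prev_labels.shape
    ys, xs = np.mgrid[0:h, 0:w]
    # Round each sampling location to the nearest pixel and keep it in bounds.
    src_x = np.clip(np.rint(xs + flow[..., 0]), 0, w - 1).astype(int)
    src_y = np.clip(np.rint(ys + flow[..., 1]), 0, h - 1).astype(int)
    return prev_labels[src_y, src_x]
```

For example, if a one-pixel object at column 1 moves one pixel to the right, a backward flow of `dx = -1` everywhere moves its label to column 2 in the new frame. In a joint scheme such as the one described above, a propagated map like this would be one input to the segmentation step, whose result in turn would refine the flow estimate.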

We also propose one of the first deep neural networks for general information propagation across video frames. In [ ], we project video pixels into a six-dimensional XYRGBT space (position, color, and time) and learn a deep network in this high-dimensional space, thereby learning efficient long-range propagation of information across several video frames. Experiments on video object segmentation, video color propagation, and semantic video segmentation demonstrate the generality and effectiveness of our video propagation network.
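To make the XYRGBT idea concrete, the sketch below maps every pixel to a 6-D feature (x, y, r, g, b, t) and propagates values from a previous frame to the current one by weighting source pixels with their feature-space distance. This is an assumption-laden stand-in: the network in [ ] learns its filters in this space, whereas here a fixed Gaussian kernel is evaluated by brute force, which is only practical for tiny inputs. The per-dimension `scales` and both function names are hypothetical choices for illustration.

```python
import numpy as np


def xyrgbt_features(frame, t, scales):
    """Map an (H, W, 3) RGB frame at time t to (H*W, 6) XYRGBT features.

    scales: length-6 divisors for (x, y, r, g, b, t); hypothetical
    hyperparameters that set how far information spreads along each axis.
    """
    h, w, _ = frame.shape
    ys, xs = np.mgrid[0:h, 0:w]
    ts = np.full((h, w), t, dtype=float)
    feats = np.concatenate(
        [xs[..., None], ys[..., None], frame.astype(float), ts[..., None]], axis=-1
    )
    return feats.reshape(-1, 6) / np.asarray(scales, dtype=float)


def gaussian_propagate(src_feats, src_values, dst_feats):
    """Propagate per-pixel values from source to destination pixels.

    Each destination value is a normalized Gaussian-weighted average of the
    source values in XYRGBT space (fixed kernel, brute force, O(M * N)).
    """
    d2 = ((dst_feats[:, None, :] - src_feats[None, :, :]) ** 2).sum(axis=-1)
    # Subtract the per-row minimum so at least one weight is exactly 1
    # (avoids all-zero rows from underflow).
    weights = np.exp(-0.5 * (d2 - d2.min(axis=1, keepdims=True)))
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ src_values
```

With strongly scaled color axes, a foreground label attached to red pixels in one frame follows the red pixels in the next frame even if they have moved, because color and time dominate the distance while position only weakly matters. Learning the filters instead of fixing a Gaussian is what lets a propagation network adapt this behavior to each task.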

More recently, we propose a fast, lightweight neural network module called "NetWarp" [ ] that learns to warp intermediate deep feature representations across video frames for better semantic segmentation. Inserting NetWarp modules into already-trained networks and then fine-tuning them yields consistent improvements in segmentation accuracy.
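The core operation of a feature-warping module is to resample the previous frame's feature map along a flow field and merge it with the current frame's features. The sketch below shows that operation with bilinear sampling and a weighted sum. It is a simplified assumption, not the NetWarp module itself: the flow is used as given (no learned flow transformation), and the two combination weights are fixed scalars standing in for learned parameters. Both function names are hypothetical.

```python
import numpy as np


def bilinear_warp(features, flow):
    """Backward-warp a (C, H, W) feature map with an (H, W, 2) flow field.

    flow[y, x] = (dx, dy) gives the sampling location (x + dx, y + dy) in
    `features`; locations outside the map are clamped to the border.
    """
    c, h, w = features.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    sx = np.clip(xs + flow[..., 0], 0, w - 1)
    sy = np.clip(ys + flow[..., 1], 0, h - 1)
    x0, y0 = np.floor(sx).astype(int), np.floor(sy).astype(int)
    x1, y1 = np.minimum(x0 + 1, w - 1), np.minimum(y0 + 1, h - 1)
    ax, ay = sx - x0, sy - y0  # fractional offsets in [0, 1)
    # Interpolate horizontally on the two neighbouring rows, then vertically.
    top = (1 - ax) * features[:, y0, x0] + ax * features[:, y0, x1]
    bottom = (1 - ax) * features[:, y1, x0] + ax * features[:, y1, x1]
    return (1 - ay) * top + ay * bottom


def combine_with_warped(cur_feats, prev_feats, flow, w_cur=0.5, w_prev=0.5):
    """Weighted sum of current features and flow-warped previous features.

    w_cur and w_prev are placeholders for weights a module would learn.
    """
    return w_cur * cur_feats + w_prev * bilinear_warp(prev_feats, flow)
```

Because bilinear sampling is differentiable with respect to the feature values, a module built around this operation can be dropped between the layers of a pretrained segmentation network and fine-tuned end to end, which matches the usage described above.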