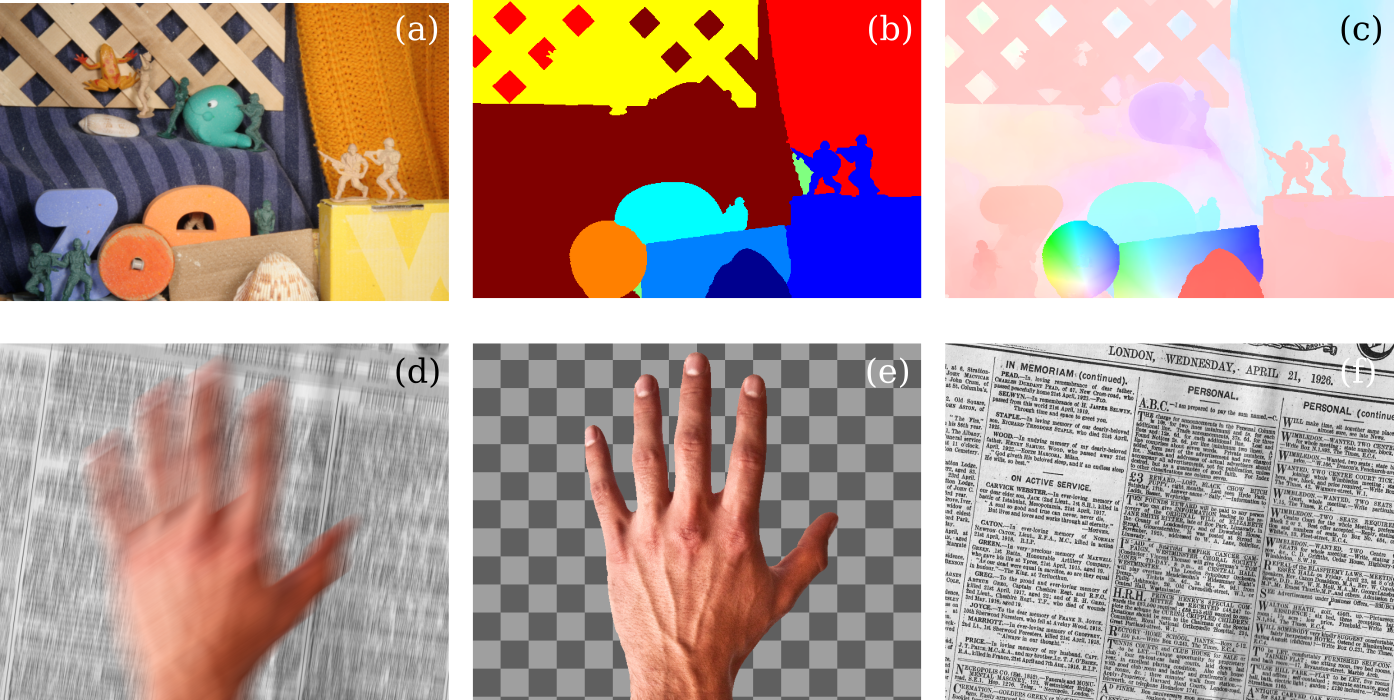

Top row: From a sequence of images (a), we extract the layer assignments (b) and compute highly accurate flow (c), especially at motion boundaries. Bottom row: Using a layered model, a motion-blurred sequence (d) can be decomposed into foreground (e) and background (f), which can then be separately deblurred.

Layered models allow scene segmentation and motion estimation to be formulated together and to inform one another. They separate the problem of enforcing spatial smoothness of motion within objects from the problem of estimating motion discontinuities at surface boundaries. Furthermore, layers define a depth ordering, allowing us to reason about occlusions.
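To make the role of depth ordering concrete, here is a minimal sketch of how a layered representation could be turned into a single flow field. It is an illustration, not the method of the cited papers: the function `composite_flow`, the front-to-back ordering of the input lists, and the toy masks and flows are all assumptions made for this example.

```python
def composite_flow(layer_masks, layer_flows):
    """Pick, at every pixel, the flow of the front-most layer covering it.

    layer_masks: list of 2D boolean grids, ordered front to back (assumed).
    layer_flows: list of 2D grids of (u, v) tuples, one grid per layer.
    The last layer is treated as background and covers every pixel.
    """
    height, width = len(layer_masks[0]), len(layer_masks[0][0])
    flow = [[None] * width for _ in range(height)]
    labels = [[None] * width for _ in range(height)]
    last = len(layer_masks) - 1
    for y in range(height):
        for x in range(width):
            # Walk the layers front to back; the first hit occludes the rest.
            for k, mask in enumerate(layer_masks):
                if mask[y][x] or k == last:
                    flow[y][x] = layer_flows[k][y][x]
                    labels[y][x] = k
                    break
    return flow, labels


# Toy 1x4 scene: a foreground object over a static background.
fg_mask = [[False, True, True, False]]
bg_mask = [[True, True, True, True]]
fg_flow = [[(2.0, 0.0)] * 4]   # foreground moves 2 px to the right
bg_flow = [[(0.0, 0.0)] * 4]   # background does not move

flow, labels = composite_flow([fg_mask, bg_mask], [fg_flow, bg_flow])
print(labels[0])  # layer index per pixel
print(flow[0])    # flow is constant inside a layer, discontinuous at its border
```

Each layer's flow can be kept smooth on its own; the discontinuity in the composite comes only from the layer assignment.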

In [ ], we present an optical flow algorithm that segments the scene into layers, estimates the number of layers, and reasons about their relative depth ordering. It uses a novel discrete approximation of the continuous objective, expressed as a sequence of depth-ordered MRFs, together with extended graph-cut optimization methods. We also extend layered flow estimation over time, enforcing temporal coherence on the layer segmentation, and show that this improves accuracy at motion boundaries.

In [ ], we extend the layer segmentation algorithm using a densely connected Conditional Random Field (CRF). To segment the video, the CRF can use evidence from any location in the image, not just from the immediate surroundings of a pixel. Additionally, the CRF drastically reduces the runtime of the segmentation step while preserving high fidelity at motion boundaries.

PCA-Layers [ ] combines a layered approach with a fast, approximate optical flow algorithm. Within each layer, the optical flow is smooth and can be expressed using low spatial frequencies. Sharp discontinuities at surface boundaries, on the other hand, are captured by the layered formulation, and therefore do not need to be modeled in the spatial structure of the flow itself, allowing highly efficient layered flow computation.
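The idea that flow within a layer needs only a few smooth components can be sketched as follows. This is a simplified illustration under stated assumptions: the three hand-written basis fields stand in for a learned basis, and the names `basis_flow`, `layered_flow`, and `layer_coeffs` are hypothetical and not taken from the PCA-Layers work.

```python
def basis_flow(coeffs, basis, y, x):
    """Flow at (y, x) as a weighted sum of smooth basis flow fields."""
    u = sum(c * b[y][x][0] for c, b in zip(coeffs, basis))
    v = sum(c * b[y][x][1] for c, b in zip(coeffs, basis))
    return (u, v)


def layered_flow(layer_labels, layer_coeffs, basis):
    """Evaluate each pixel with the coefficients of the layer it belongs to."""
    height, width = len(layer_labels), len(layer_labels[0])
    return [
        [basis_flow(layer_coeffs[layer_labels[y][x]], basis, y, x)
         for x in range(width)]
        for y in range(height)
    ]


WIDTH = 4
# Hand-written smooth basis fields on a 1x4 grid (stand-ins for a learned basis).
translate_u = [[(1.0, 0.0)] * WIDTH]
translate_v = [[(0.0, 1.0)] * WIDTH]
stretch_u = [[(float(x), 0.0) for x in range(WIDTH)]]
basis = [translate_u, translate_v, stretch_u]

layer_labels = [[0, 0, 1, 1]]      # two layers, boundary between x=1 and x=2
layer_coeffs = [
    [1.0, 0.0, 0.5],               # layer 0: translation plus a slight stretch
    [-2.0, 0.0, 0.0],              # layer 1: pure translation to the left
]

dense_flow = layered_flow(layer_labels, layer_coeffs, basis)
print(dense_flow[0])
```

Three coefficients per layer describe the motion here; the jump between x=1 and x=2 is produced by the layer labels, not by the basis.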

We also use layered models in the treatment of motion blur [ ]. In a dynamic scene, objects can move and occlude each other. Together with the nonzero exposure time of the camera, this creates motion blur, which can be complex close to object boundaries, where pixel values arise as a combination of foreground and background. Using a layered model allows us to separate overlapping layers from each other, making it possible to simultaneously segment the scene, compute optical flow in the presence of motion blur, and deblur each layer independently.
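The mixing of foreground and background near a moving boundary can be illustrated with a small 1D simulation. It is a sketch under simplifying assumptions (a static background, a foreground of uniform intensity, and linear motion of one pixel per time step), not the blur model of the cited work; `render_sharp` and `render_blurred` are hypothetical helper names.

```python
def render_sharp(background, fg_value, fg_start, fg_width):
    """One instantaneous frame: the foreground occludes the background."""
    row = list(background)
    for x in range(fg_start, fg_start + fg_width):
        if 0 <= x < len(row):
            row[x] = fg_value
    return row


def render_blurred(background, fg_value, fg_start, fg_width, steps):
    """Average `steps` sharp frames while the foreground moves 1 px per step."""
    acc = [0.0] * len(background)
    for t in range(steps):
        frame = render_sharp(background, fg_value, fg_start + t, fg_width)
        acc = [a + f for a, f in zip(acc, frame)]
    return [a / steps for a in acc]


# With a black background and a white foreground, the blurred row is the
# fraction of the exposure during which each pixel saw the foreground.
alpha = render_blurred([0.0] * 8, 1.0, fg_start=1, fg_width=2, steps=4)

# With a gray background, each pixel is a per-pixel mix of the two layers.
bg_value, fg_value = 0.5, 1.0
blurred = render_blurred([bg_value] * 8, fg_value, fg_start=1, fg_width=2, steps=4)
mixed = [a * fg_value + (1.0 - a) * bg_value for a in alpha]

print(alpha)
print(blurred)
```

In this toy setting `blurred` matches `mixed`, which is why knowing the layer supports and their motion makes it possible to attribute each observed pixel value to its foreground and background contributions.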