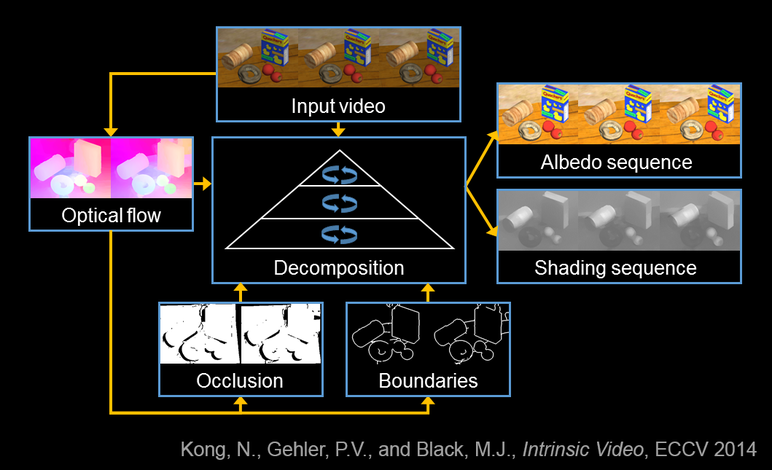

Intrinsic images such as albedo and shading are valuable for later stages of visual processing. Previous methods for extracting albedo and shading use either single images or images together with depth data. Instead, we define intrinsic video estimation as the problem of extracting temporally coherent albedo and shading from video alone. Our approach exploits the assumption that albedo is constant over time while shading changes slowly. Optical flow aids in the accurate estimation of intrinsic video by providing temporal continuity as well as putative surface boundaries. Additionally, we find that the estimated albedo sequence can be used to improve optical flow accuracy in sequences with changing illumination. The approach makes only weak assumptions about the scene, and we show that it substantially outperforms existing single-frame intrinsic image methods. We evaluate this quantitatively on synthetic sequences as well as on challenging natural sequences with complex geometry, motion, and illumination.
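As a rough illustration of the temporal assumption described above (albedo constant over time, shading changing slowly), the following toy sketch builds a short synthetic sequence and measures frame-to-frame change in each layer. It assumes the standard multiplicative intrinsic image model (image = albedo × shading, additive in the log domain), which this page does not spell out. The function name `temporal_penalties`, the quadratic penalties, and the `shading_weight` parameter are hypothetical choices for illustration and are not the energy used in the paper. The sketch also assumes the frames are already aligned, whereas the actual method relies on optical flow for correspondence.

```python
import numpy as np


def temporal_penalties(log_albedo, log_shading, shading_weight=0.1):
    """Quadratic frame-to-frame change of each layer (hypothetical penalty).

    log_albedo, log_shading: arrays of shape (T, H, W), assumed to be
    pixel-aligned across time (no motion compensation is done here).
    """
    albedo_change = np.diff(log_albedo, axis=0)    # shape (T-1, H, W)
    shading_change = np.diff(log_shading, axis=0)  # shape (T-1, H, W)
    albedo_term = float(np.sum(albedo_change ** 2))
    shading_term = float(shading_weight * np.sum(shading_change ** 2))
    return albedo_term, shading_term


# Toy sequence: a fixed albedo map and a shading layer that brightens slowly.
rng = np.random.default_rng(0)
T, H, W = 4, 3, 3
albedo = rng.uniform(0.2, 0.9, size=(H, W))
shading = np.stack([np.full((H, W), 0.5 + 0.05 * t) for t in range(T)])

log_albedo = np.broadcast_to(np.log(albedo), (T, H, W))
log_shading = np.log(shading)

# Multiplicative model in the log domain: log I = log A + log S.
frames = np.exp(log_albedo + log_shading)

albedo_term, shading_term = temporal_penalties(log_albedo, log_shading)
print("albedo term:", albedo_term)
print("shading term:", shading_term)
```

In this toy setup the albedo term is zero because the same albedo map is reused in every frame, while the shading term is small but nonzero. A decomposition method would have to recover both layers from `frames` alone, which is the hard part this sketch does not attempt.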

We publish the dataset for

Kong, N., Gehler, P.V. and Black, M.J.: "Intrinsic Video," in Computer Vision – ECCV 2014, Springer International Publishing, volume 8690, Lecture Notes in Computer Science, pages 360-375, September 2014.

Dataset | Results of our method (IV) | Numerical evaluation code

Please check the readme for details and important notes.

If you use this, please cite our paper: bib

We are planning to release an improved version of the code soon.