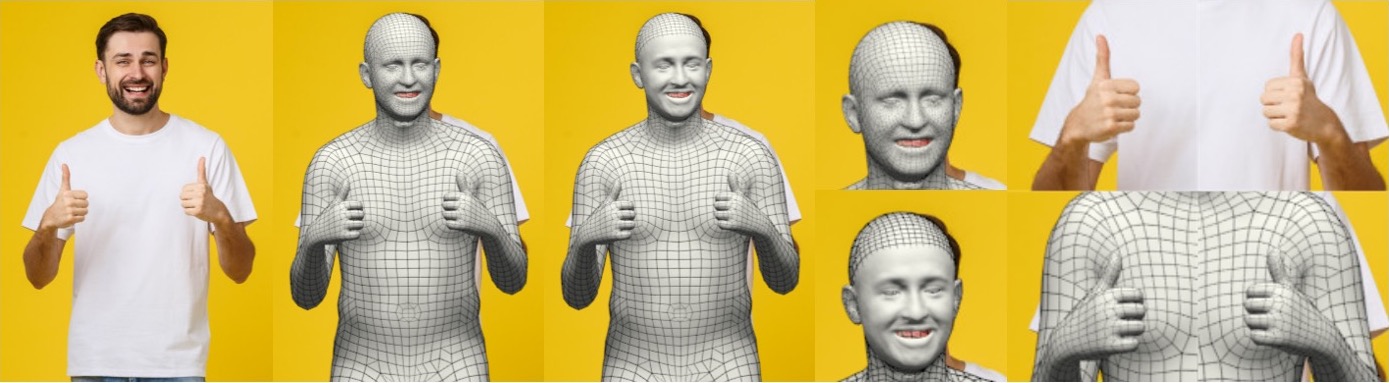

Bodies are not a collection of joints. Bodies have shape, can move, can express emotion, and can interact with the world. Hence virtual humans need emotional faces, realistic hands, and the ability to move and use them. Here we combine the SMPL-X body with a detailed face model (DECA) and train a regressor (PIXIE) to robustly recover body shape and pose, detailed face shape, and accurate hand pose from a single image.

Until recently, human pose has often been represented by 10-12 body joints in 2D or 3D. This is inspired by Johannson's moving light displays, which showed that some human actions can be recognized from the motion of the major joints of the body. We have argued that such representations are too impoverished to model human behavior. Humans express their emotions through the surface of their face and manipulate the world through the surface of their bodies.

Consequently, Perceiving Systems has focused on modeling and inferring 3D human pose and shape (HPS) using expressive 3D body models that capture the surface of the body either explicitly as a mesh or implicitly as a neural network. Such 3D shape models allow us to capture human-scene contact and provide information about a person related to their health, age, fitness, and clothing size.

We introduced the SMPL body model in 2015 [ ] and made it available for research and commercial licensing. SMPL is realistic, efficient, posable, and compatible with most graphics packages. It is also differentiable and easy to integrate into optimization or deep learning methods. Since its release, it has become the de facto standard in the field and is widely used in industry and academia.

SMPL also has limitations, some of which have been addressed by STAR [ ], which is learned from thousands more 3D body scans and has local pose corrective blend shapes.

We have steadily improved on SMPL adding hands and faces to create SMPL-X [ ]. Most recently we have combined this with the detailed facial model from DECA [ ] to increase expressive realism. We always combine these models with methods to estimate them from images. Our most recent neural regression recent method, PIXIE [ ], uses a moderator to assess the reliability of face and hand regressors before integrating the body, face, and hand features.

Current work is extending these models to include clothing. For example, CAPE [ ] uses a convolutional mesh VAE to learn a generative model of clothing that is compatible with SMPL. See also our work on learning implicit models of clothed 3D humans.

This work on modeling humans is the foundation for our analysis of human movement, emotion, and behavior.

ExPose (ECCV 2020) dataset (link)

ExPose (ECCV 2020) dataset (link)

A curated dataset that contains 32.617 pairs of:

- an in-the-wild RB image, and

- an expressive whole-body 3D human reconstruction (SMPL-X).

The dataset can be used to train models that predict expressive 3D

human bodies, from a single RB image as input, similar to ExPose.