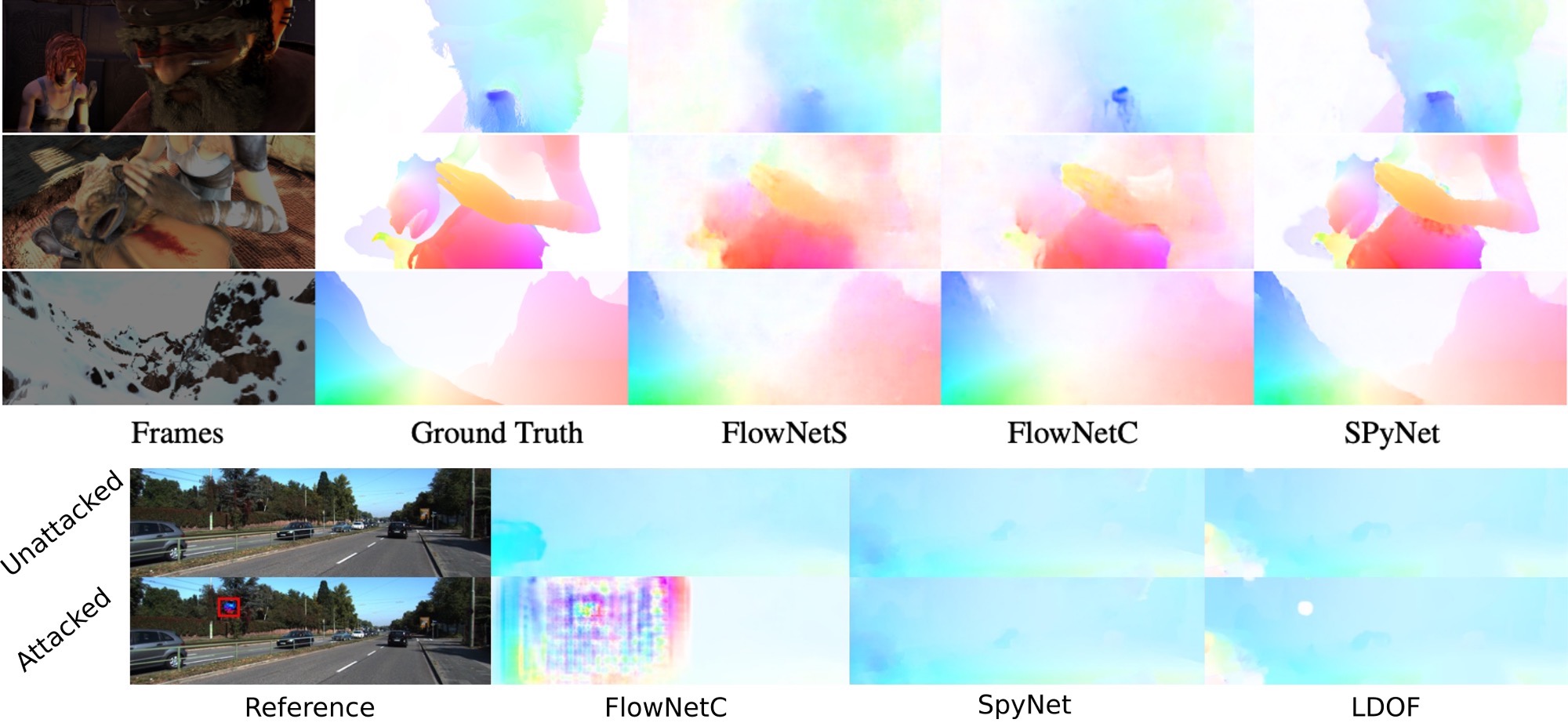

Top: Deep learning methods like SpyNet [ ] now dominate optical flow estimation. Bottom: Unfortunately, many of these methods are easily attacked [ ]. A small adversarial image patch (in the red square) can disrupt large parts of the flow field.

Optical flow is the projection of the 3D motion field into the 2D image plane and it is useful for a variety of applications. Advances in deep learning have rapidly improved the accuracy of optical flow methods. Despite this, they have several limitations that we have worked to resolve.

To deal with large image motions in a compact network, we developed the Spatial Pyramid Network (SpyNet) [ ], which computes optical flow by combining a classical coarse-to-fine flow approach with deep learning. At each level of a spatial pyramid, the deep network computes an update to the current flow estimate. SpyNet is 96% smaller than FlowNet, is very fast, and can be trained end-to-end, making it easy to incorporate into other networks for tasks like action recognition [ ].

We discovered that many existing deep flow networks are not robust to adversarial attacks, even when only a small portion of the image is corrupted [ ]. We learn an optimal pattern that, when placed in the image, can cause widespread errors in the flow. This gives insights into the inner workings of these networks, points out potential risks, and suggests a path to making them more robust.

Deep networks also require significant amounts of training data, yet there are no sensors that give ground truth optical flow for real image sequences and synthetic data is currently unrealistic. Consequently, have developed methods for unsupervised learning.

To that end, we exploit the geometric structure of optical flow in rigid scenes. With Competitive Collaboration [ ] we train four different networks that estimate monocular depth, camera pose, optical flow and non-rigid motion segmentation. These models compete and collaborate to explain the motion in the scene, producing accurate optical flow without explicit supervision.

The lack of proper occlusion handling in commonly used data terms is a major source of error in existing unsupervised methods. To address this, we use three consecutive frames to strengthen the photometric loss and explicitly reason about occlusions [ ]. Our multi-frame formulation outperforms existing unsupervised two-frame methods and even produces results on par with some fully supervised methods.

Additionally, motion occlusion boundaries give important information about scene structure and we have worked on learning to detect these [ ].