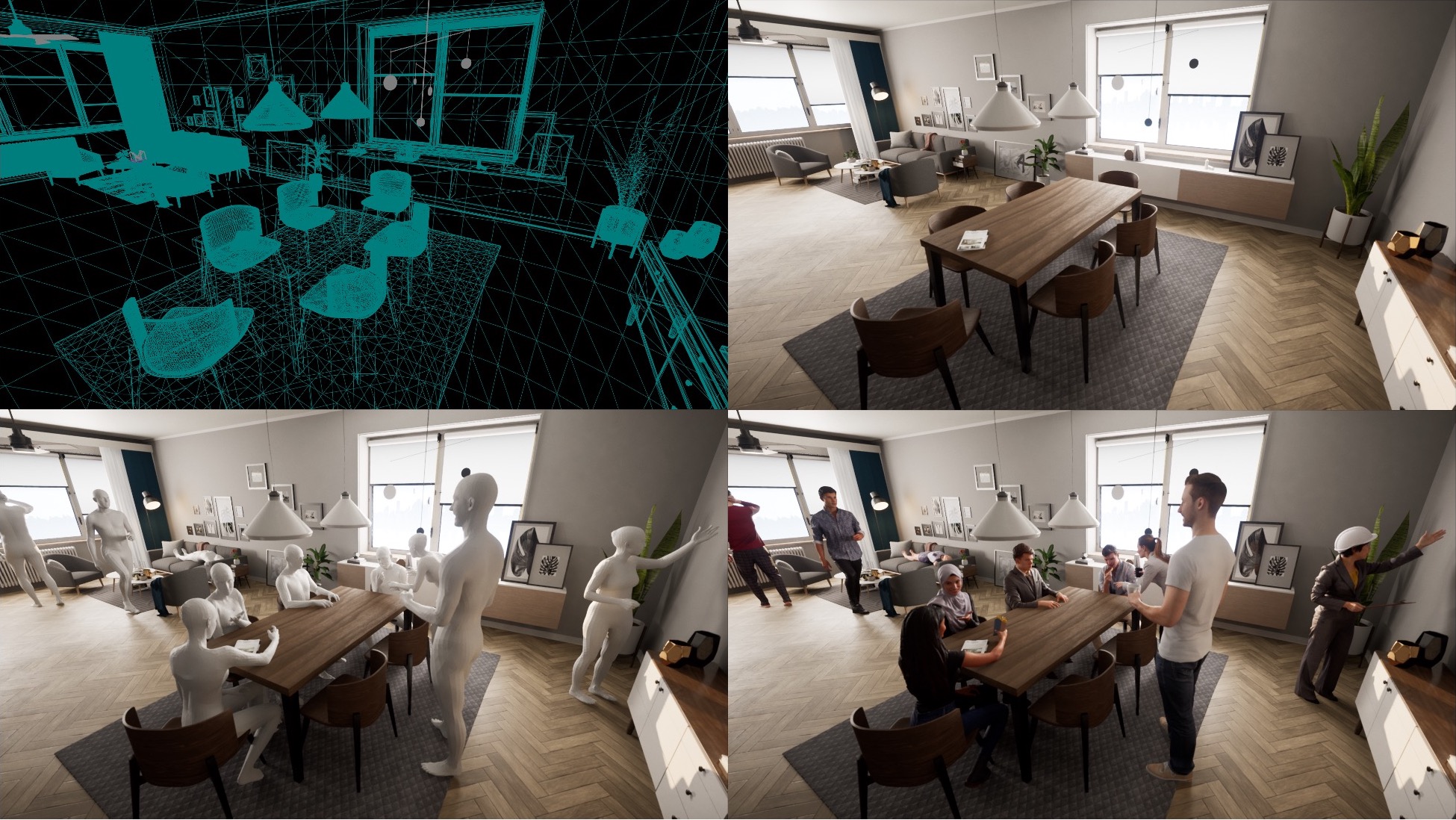

Given a novel 3D scene, we want to populate it with realistic digital humans performing actions that make sense. Here, POSA [ ] automatically places SMPL-X bodies in a 3D scene using a novel body-centric human-scene interaction model. These bodies are then replaced by commercial 3D scans.

Much of the work in Perceiving Systems focuses on the capture and modeling of humans and their motion. How do we know if our models are any good? What does it mean to have a good model of humans and their behavior? We argue for something akin to a Turing Test for avatars.

Specifically, given a novel 3D scene, a digital human should be able to interact with that scene in a way that is indistinguishable from real human behavior. We initiated this new line of research in 2021 with our paper on "Generating 3D People in Scenes without People" [ ] and believe that it will be critical to many applications including computer games, animation, XR, and the Metaverse.

While these applications are important, our interest in putting people into scenes is deeper. Our ability, as humans, to perceive and interact with the world is essential for survival and at the core of what it means to be human. If we can model how virtual 3D people interact with 3D scenes, we will have a testable model of ourselves.

This is fundamentally an AI-complete problem and, consequently, is a long-term research goal. While fully realistic digital humans may remain far off, we are making concrete progress today. Our core research is focused in two directions: neural rendering to create realistic-looking humans and putting 3D people into 3D scenes with natural behavior.