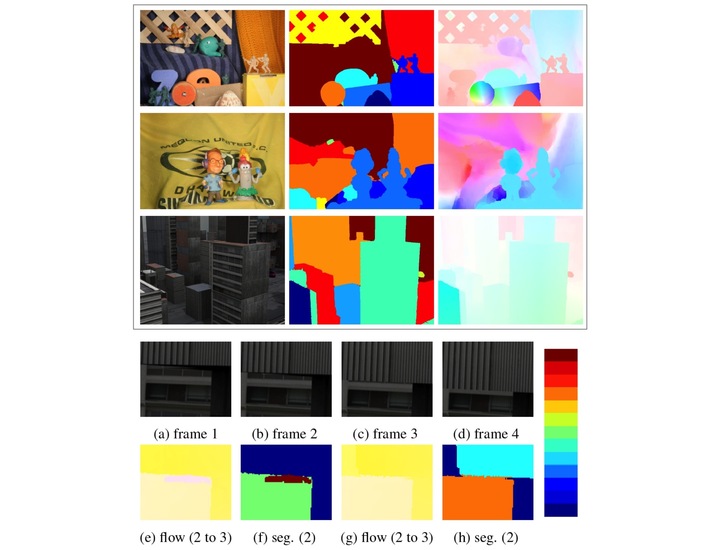

Top: Estimated flow fields and scene structure on some Middlebury test sequences. Row 1: our nLayers method separates the claw of the frog from the background and recovers the fine flow structure. Row 2: nLayers separates the foreground figures of “Mequon” from the background and produces sharp motion boundaries. However, the non-rigid motion of the cloth violates the layered assumption and causes errors in the estimated flow fields. Row 3: by correctly recovering the scene structure, nLayers achieves very low motion boundary errors on the “Urban” sequence. A part of the building in the bottom left corner moves out of the image boundary and its motion is predicted by the affine model. However, the building’s motion violates the affine assumption, resulting in errors in the estimated motion.

Bottom: Benefits of multiple frames. Occlusion reasoning using frames 2 and 3 (e-f) is hard (detail from Urban3); enforcing temporal coherence of the support functions using 4 frames significantly reduces the errors in both the flow field and the segmentation (g-h). The flow field is from frame 2 to frame 3 and the segmentation is for frame 2.

Color key shows the depth ordering of layers from farthest (blue) to closest (red).

The segmentation of scenes into regions of coherent structure and the estimation of image motion are fundamental problems in computer vision which are often treated separately. When available, motion provides an important cue for identifying the surfaces in a scene and for differentiating image texture from physical structure. This project addresses the principled combination of motion segmentation and static scene segmentation using a layered model.

Layered models offer an elegant approach to motion segmentation and have many advantages. A typical scene consists of very few moving objects, and representing each moving object by a layer allows the motion of each layer to be described more simply. Such a representation can also explicitly model the occlusion relationships between layers, making the detection of occlusion boundaries possible.
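To make the idea of explicit occlusion concrete, here is a minimal sketch of how depth-ordered layers could be composited into a single flow field. It assumes binary support masks ordered front to back plus an implicit background layer that covers every pixel; the names `visible_layer` and `composite_flow` and the array layout are illustrative assumptions, not the representation used by nLayers.

```python
import numpy as np

def visible_layer(supports):
    """Return, per pixel, the index of the frontmost layer whose support is on.

    supports: (K, H, W) boolean array, ordered front (index 0) to back.
    Pixels claimed by no layer are assigned to an implicit background, index K.
    """
    supports = np.asarray(supports, dtype=bool)
    # Append an all-True background so that every pixel gets some layer.
    background = np.ones((1,) + supports.shape[1:], dtype=bool)
    stacked = np.concatenate([supports, background], axis=0)
    # argmax over booleans returns the first True along the layer axis.
    return np.argmax(stacked, axis=0)

def composite_flow(supports, layer_flows):
    """Pick each pixel's flow vector from the layer visible at that pixel.

    layer_flows: (K + 1, H, W, 2) array, one flow field per layer,
    with the background flow stored last.
    """
    idx = visible_layer(supports)
    picked = np.take_along_axis(layer_flows, idx[None, ..., None], axis=0)
    return picked[0]
```

In this sketch a front layer hides everything behind it wherever its mask is on, so the places where the visible-layer index changes are candidate occlusion boundaries.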

Previous methods, however, have failed to capture the structure of complex scenes, provide precise object boundaries, effectively estimate the number of layers in a scene, or robustly determine the depth order of the layers. Furthermore, previous methods have focused on optical flow between pairs of frames rather than longer sequences.

We introduce a new layered model of moving scenes in which the layer segmentations enable the integration of motion over time. This results in improved optical flow estimates, disambiguation of local depth orderings, and correct interpretation of occlusion boundaries. Furthermore, we show that image sequences with more frames are needed to resolve ambiguities in depth ordering at occlusion boundaries; temporal layer constancy makes this feasible.

One key issue is that the layer-structure inference problem is difficult to optimize. Most methods adopt an expectation maximization (EM) style algorithm that is susceptible to local optima. Solving the layered-flow inference problem requires an optimization method that can make large changes to the solution in a single step, a task better suited to discrete optimization. Hence, we propose a discrete layered model based on a sequence of ordered Markov random fields (MRFs). This model, unlike standard Ising/Potts MRFs, cannot be directly solved by "off-the-shelf" optimizers, such as graph cuts. Therefore, we develop a sequence of non-standard "moves" that can simultaneously change the states of several binary MRFs. We also embed continuous flow estimation into the discrete framework to adapt the state space to estimate sub-pixel motion. The resulting discrete-continuous scheme enables us to infer the number of layers and their depth ordering automatically for a sequence.
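For reference, the energy of a standard binary Ising MRF on a pixel grid, the kind of model that the ordered layered model is contrasted with, can be sketched as follows. The function name `ising_energy`, the `unary` cost array, and the smoothness `weight` are hypothetical choices for illustration; this is not the ordered multi-MRF energy proposed here.

```python
import numpy as np

def ising_energy(labels, unary, weight):
    """Energy of a binary labeling under a 4-connected Ising model.

    labels: (H, W) array of 0/1 labels.
    unary:  (H, W, 2) array; unary[y, x, l] is the cost of label l at (y, x).
    weight: penalty for each pair of neighboring pixels with different labels.
    """
    labels = np.asarray(labels, dtype=int)
    rows, cols = np.indices(labels.shape)
    # Data term: cost of the chosen label at every pixel.
    data = unary[rows, cols, labels].sum()
    # Smoothness term: count horizontal and vertical label disagreements.
    cuts = (labels[:, 1:] != labels[:, :-1]).sum()
    cuts += (labels[1:, :] != labels[:-1, :]).sum()
    return float(data + weight * cuts)
```

A single MRF of this form can be minimized with graph cuts. The difficulty described above arises because the layered model couples several such binary fields through their depth order, so changing one field alters what the others must explain.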

Previous work on layered motion estimation considers optical flow between only two frames. Unfortunately, with only two frames, depth ordering at occlusion boundaries is fundamentally ambiguous. Critically, our approach is formulated to estimate optical flow over time. By estimating layer segmentations over three or more frames we obtain a reliable depth ordering of the layers and more accurate motion estimates.

In summary, our contributions include:

- formulating a discrete layered model based on a sequence of ordered Ising MRFs and devising a set of non-standard moves to optimize it;

- formulating methods for automatically determining the number of layers and their depth ordering for a given sequence;

- concretely improving layer segmentation on a set of real-world sequences;

- demonstrating the benefits of using more frames for optical flow estimation on the Middlebury optical flow benchmark.

The main limitation of the method is its poor computational efficiency. Our current research focuses on efficient optimization methods that can be applied to longer image sequences.