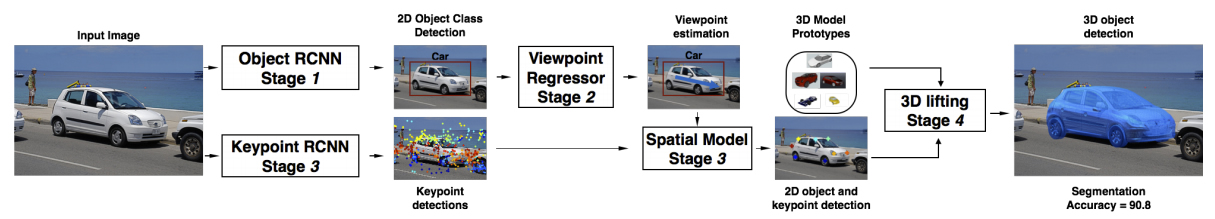

An overview of our 3D object detection model. This model localizes objects and infers their articulation and 3D shape from single static images. Objects of different categories can be detected in cluttered environments.

The ability to recognize and categorize objects in any type of visual scene is an integral part of scene recognition systems. While this problem has largely been solved for constrained scenarios such as face detection, the general case of recognizing any kind of object in real-world, cluttered environments remains an open research problem. Many different factors contribute to its complexity. A main complicating factor is that images give access only to 2D projections of objects, whereas the objects themselves are three-dimensional physical entities. This projection leads to significant ambiguity in object appearance. The predominant paradigm today is therefore to largely ignore the 3D structure and to attack object class recognition using 2D feature-based models.

In contrast, we investigate models that take the 3D structure of objects into account. We believe that building models that encode the three-dimensional origin of real-world objects has several benefits. It leads to more compact computational models, which in turn need less training data. Further, the output of 3D object detection systems enables richer reasoning about entire visual scenes. A simple bounding box around objects of interest may be sufficient for counting and coarse localization of objects. However, we aim to recover the articulation and 3D extent of objects together with a precise localization and categorization. This richer information is needed to extract knowledge about how objects are composed in a scene as a whole.

In recent years we have made progress towards this goal. The work [ ] proposes methods that perform 3D bounding box detection from 2D images. We extend a state-of-the-art model, the Deformable Parts Model (DPM) of Felzenszwalb et al., to a full 3D object model. The DPM is a mixture of star-based CRF models that include deformation and appearance terms. We propose a CRF that models an object directly in 3D and that can be evaluated under any image projection. For novel, unseen images, we then reason jointly about the object identity, its localization, and the projection from 3D to 2D. Since most benchmarks pose object detection as a 2D task and therefore provide only 2D training data, we use CAD models to inform our detection model about the 3D structure of objects. This enables reasoning about occlusion [ ] and transfer learning of geometric information across different object instances [ ].
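The idea of evaluating a 3D part model under an image projection can be illustrated with a minimal sketch. It is not the implementation used in the work above: the function names (`rotate_y`, `project`, `star_score`), the pinhole camera with fixed `focal` and `depth`, the single azimuth angle as viewpoint, and the quadratic 2D deformation cost are all assumptions chosen for brevity. Appearance scores are passed in as plain numbers rather than computed from image features.

```python
import math

def rotate_y(point, azimuth):
    """Rotate a 3D point about the vertical (y) axis by `azimuth` radians."""
    x, y, z = point
    c, s = math.cos(azimuth), math.sin(azimuth)
    return (c * x + s * z, y, -s * x + c * z)

def project(point, azimuth, focal=1.0, depth=5.0):
    """Pinhole projection of an object-centred 3D point seen from `azimuth`.

    `focal` and `depth` (camera distance to the object centre) are
    hypothetical camera parameters, not values from the source.
    """
    x, y, z = rotate_y(point, azimuth)
    zc = z + depth  # depth of the point in camera coordinates
    return (focal * x / zc, focal * y / zc)

def star_score(anchors_3d, placements_2d, appearance, azimuth, weight=1.0):
    """Score one hypothesis of a star-shaped part model.

    Each part contributes its appearance score minus a quadratic penalty
    on the 2D distance between where the part was placed in the image and
    where its 3D anchor projects under the hypothesised viewpoint.
    """
    total = 0.0
    for anchor, placed, app in zip(anchors_3d, placements_2d, appearance):
        u, v = project(anchor, azimuth)
        du, dv = placed[0] - u, placed[1] - v
        total += app - weight * (du * du + dv * dv)
    return total
```

In this sketch the same 3D anchors are reused for every viewpoint, so a detector would maximise `star_score` over candidate azimuths and part placements instead of storing a separate 2D layout per view.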

In [ ] we extended our work into a system that is capable of extracting detailed CAD models from unconstrained images. We combine several estimation steps that infer viewpoint, object identity, and position into a coherent system that produces fine-grained, detailed hypotheses about the objects present in the scene. By careful design, each stage of the method consistently improves performance, and the full system achieves state-of-the-art results in simultaneous 2D bounding box and viewpoint estimation on the challenging Pascal3D+ dataset.