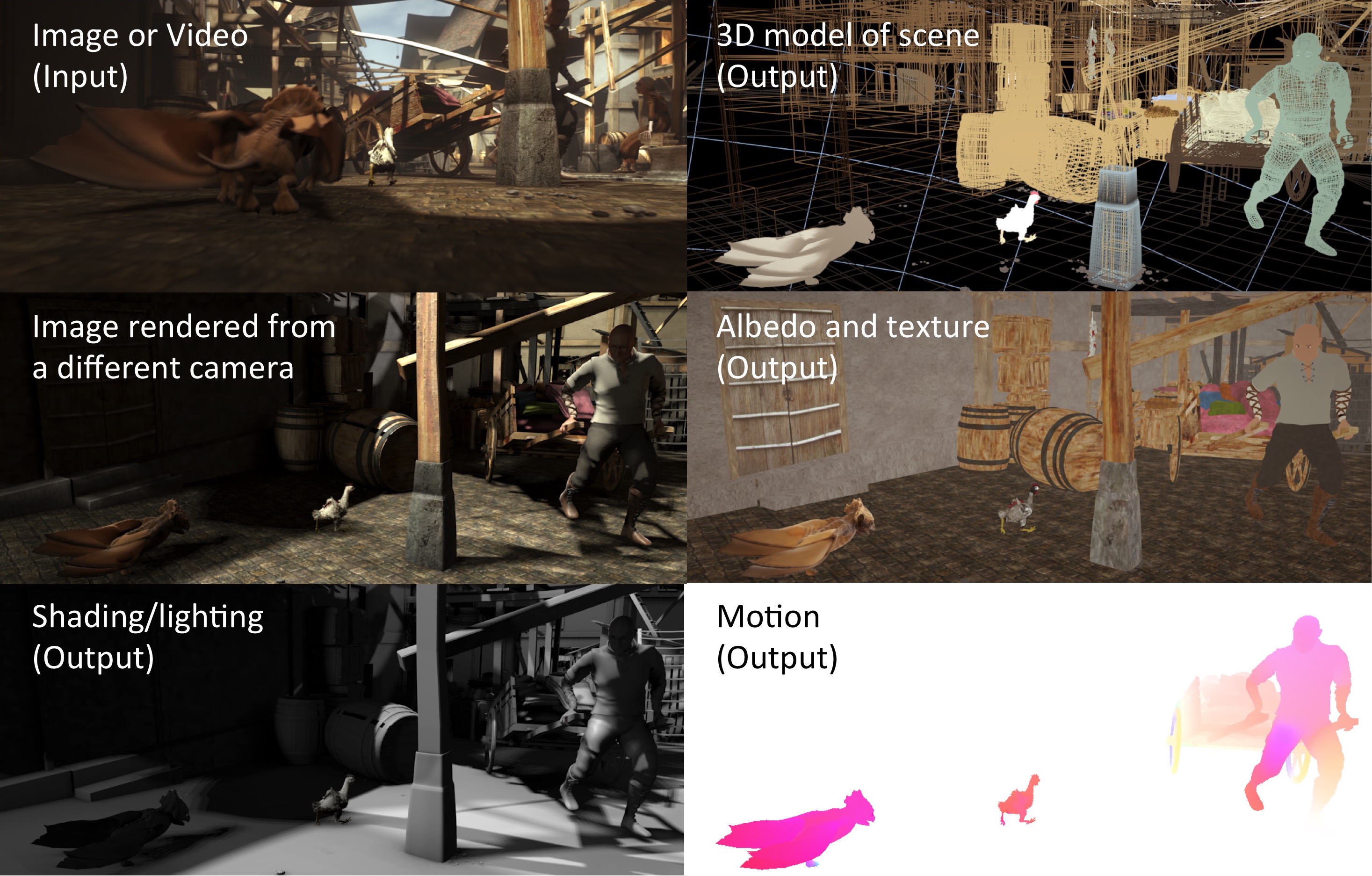

Illustrative (synthetic) example of inverting the rendering process: taking an image sequence and producing the graphics models that generate it.

Computer vision as analysis by synthesis has a long tradition and remains central to a wide class of generative methods. In this top-down approach, vision is formulated as a search for the parameters of a model: the model is rendered to produce an image (or image features), and the result is compared with the observed pixels (or features). The model can take many forms of varying realism but, when the model and rendering process are designed to produce realistic images, this process is often called inverse graphics. In a sense, the approach tries to reverse-engineer the physical process that produced an image of the world.
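The render-and-compare loop described above can be sketched on a toy problem. This is a minimal illustration under stated assumptions, not the method used in any particular project: the "renderer" is a hypothetical function that draws a Gaussian blob, the model parameters are just the blob center, and central finite differences stand in for automatic differentiation. The names `render`, `loss`, `numerical_gradient`, and `fit` are invented for this example.

```python
import numpy as np

def render(params, size=32, sigma=4.0):
    """Toy 'renderer' (hypothetical): an isotropic Gaussian blob centred at (cx, cy)."""
    cx, cy = params
    ys, xs = np.mgrid[0:size, 0:size]
    return np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2.0 * sigma ** 2))

def loss(params, observed):
    """Sum of squared pixel differences between the rendering and the observation."""
    return float(np.sum((render(params) - observed) ** 2))

def numerical_gradient(params, observed, eps=1e-4):
    """Central finite differences; a simple stand-in for automatic differentiation."""
    grad = np.zeros_like(params)
    for i in range(len(params)):
        step = np.zeros_like(params)
        step[i] = eps
        grad[i] = (loss(params + step, observed) - loss(params - step, observed)) / (2 * eps)
    return grad

def fit(observed, init, lr=0.05, iters=500):
    """Gradient descent on the model parameters: render, compare, update, repeat."""
    params = np.array(init, dtype=float)
    for _ in range(iters):
        params -= lr * numerical_gradient(params, observed)
    return params

# Synthesize an "observed" image from known parameters, then recover them.
true_params = np.array([20.0, 12.0])
observed = render(true_params)
estimate = fit(observed, init=[14.0, 16.0])
print("estimated center:", estimate)
```

A realistic inverse-graphics system replaces the blob with a full graphics model and renderer, and the pixel loss with whatever image or feature comparison suits the task; the structure of the loop stays the same.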

Recent advances in graphics hardware, open source renderers, and probabilistic programming are making this approach viable. We are addressing inverse rendering in multiple projects that use automatic differentiation and stochastic sampling to solve different aspects of the problem. For example, the OpenDR framework is widely used in our research on human body modeling. It allows us to formulate a problem and prototype a solution very quickly.
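To illustrate the stochastic sampling route, here is a minimal sketch that infers the parameters of a toy renderer with random-walk Metropolis. Everything in it is an assumption made for illustration: the Gaussian-blob `render` function, the Gaussian pixel-noise likelihood, the flat prior, and the choice of Metropolis itself, which is not necessarily the sampler used in these projects. It does not use OpenDR or any probabilistic programming system.

```python
import numpy as np

def render(center, size=32, sigma=4.0):
    """Toy 'renderer' (hypothetical): a Gaussian blob at the given center."""
    cx, cy = center
    ys, xs = np.mgrid[0:size, 0:size]
    return np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2.0 * sigma ** 2))

def log_likelihood(center, observed, noise_std=0.1):
    """Log-likelihood (up to a constant) under independent Gaussian pixel noise."""
    residual = render(center) - observed
    return -0.5 * np.sum(residual ** 2) / noise_std ** 2

def metropolis(observed, init, steps=3000, proposal_std=0.3, seed=0):
    """Random-walk Metropolis over the blob center, assuming a flat prior."""
    rng = np.random.default_rng(seed)
    current = np.array(init, dtype=float)
    current_ll = log_likelihood(current, observed)
    samples = np.empty((steps, 2))
    for t in range(steps):
        proposal = current + rng.normal(scale=proposal_std, size=2)
        proposal_ll = log_likelihood(proposal, observed)
        # Accept with probability min(1, likelihood ratio).
        if np.log(rng.uniform()) < proposal_ll - current_ll:
            current, current_ll = proposal, proposal_ll
        samples[t] = current
    return samples

# Noisy synthetic observation generated from known parameters.
rng = np.random.default_rng(1)
true_center = np.array([20.0, 12.0])
observed = render(true_center) + rng.normal(scale=0.1, size=(32, 32))

samples = metropolis(observed, init=[14.0, 16.0])
posterior_mean = samples[len(samples) // 2:].mean(axis=0)  # discard first half as burn-in
print("posterior mean of center:", posterior_mean)
```

Unlike a gradient-based fit, the sampler needs only forward renderings, and the retained samples give a rough picture of the uncertainty in the parameters rather than a single point estimate.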

We also approach the problem from the "bottom up"; that is, from images and videos we extract intrinsic images, which represent physical properties of the scene tied to the pixel grid. These provide a generative model of images (or video) and can be used as an intermediate representation between graphics models and images.
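As a concrete, simplified picture of intrinsic images acting as a generative model, one common formulation factors each pixel into reflectance (albedo) times shading. The sketch below assumes that two-layer model purely for illustration; the text above does not say which intrinsic layers are used, and the arrays `albedo` and `shading` are synthetic.

```python
import numpy as np

size = 8
ys, xs = np.mgrid[0:size, 0:size]

# Synthetic intrinsic layers, both defined on the pixel grid.
albedo = np.where((xs // 4 + ys // 4) % 2 == 0, 0.8, 0.3)  # checkerboard reflectance
shading = 0.2 + 0.8 * xs / (size - 1)                       # left-to-right illumination ramp

# Generative direction: the intrinsic images multiply to form the observed image.
image = albedo * shading

# In the log domain the product becomes a sum, so knowing one layer
# determines the other: log(image) = log(albedo) + log(shading).
recovered_shading = np.exp(np.log(image) - np.log(albedo))
print("max shading error:", np.abs(recovered_shading - shading).max())
```

In practice neither layer is known in advance, so the decomposition is ill-posed and has to be constrained with priors or learned models; the example only shows how the layers relate to the image they generate.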