2025

OpenCapBench: A Benchmark to Bridge Pose Estimation and Biomechanics

Gozlan, Y., Falisse, A., Uhlrich, S., Gatti, A., Black, M., Chaudhari, A.

In IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), February 2025 (inproceedings)

2024

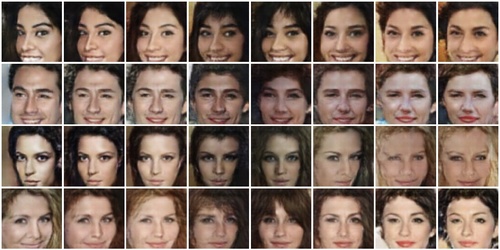

SPARK: Self-supervised Personalized Real-time Monocular Face Capture

Baert, K., Bharadwaj, S., Castan, F., Maujean, B., Christie, M., Abrevaya, V., Boukhayma, A.

In SIGGRAPH Asia 2024 Conference Proceedings, December 2024 (inproceedings) Accepted

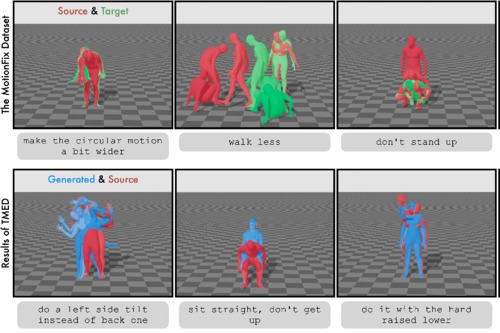

MotionFix: Text-Driven 3D Human Motion Editing

Athanasiou, N., Cseke, A., Diomataris, M., Black, M. J., Varol, G.

In SIGGRAPH Asia 2024 Conference Proceedings, ACM, December 2024 (inproceedings) To be published

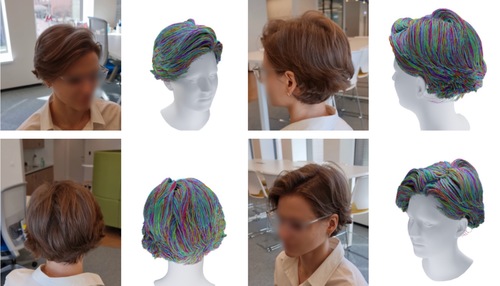

Human Hair Reconstruction with Strand-Aligned 3D Gaussians

Zakharov, E., Sklyarova, V., Black, M. J., Nam, G., Thies, J., Hilliges, O.

In European Conference on Computer Vision (ECCV 2024), LNCS, Springer Cham, October 2024 (inproceedings)

Stable Video Portraits

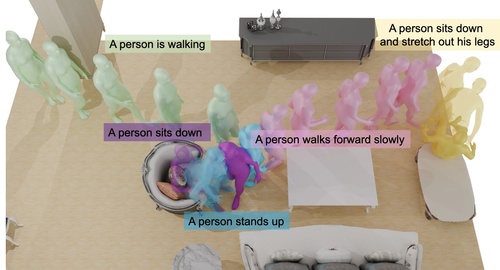

Generating Human Interaction Motions in Scenes with Text Control

Yi, H., Thies, J., Black, M. J., Peng, X. B., Rempe, D.

In European Conference on Computer Vision (ECCV 2024), LNCS, Springer Cham, October 2024 (inproceedings)

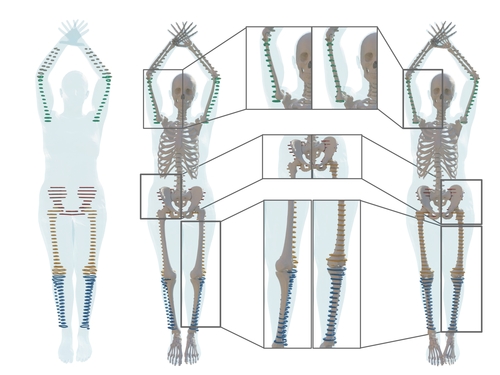

On predicting 3D bone locations inside the human body

Dakri, A., Arora, V., Challier, L., Keller, M., Black, M. J., Pujades, S.

In 26th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), October 2024 (inproceedings)

Synthesizing Environment-Specific People in Photographs

Ostrek, M., O’Sullivan, C., Black, M., Thies, J.

In European Conference on Computer Vision (ECCV 2024), LNCS, Springer Cham, October 2024 (inproceedings) Accepted

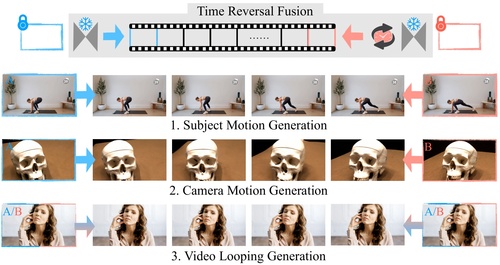

Explorative Inbetweening of Time and Space

Feng, H., Ding, Z., Xia, Z., Niklaus, S., Fernandez Abrevaya, V., Black, M. J., Zhang, X.

In European Conference on Computer Vision (ECCV 2024), LNCS, Springer Cham, October 2024 (inproceedings)

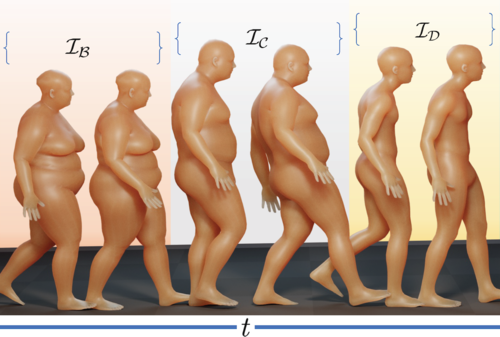

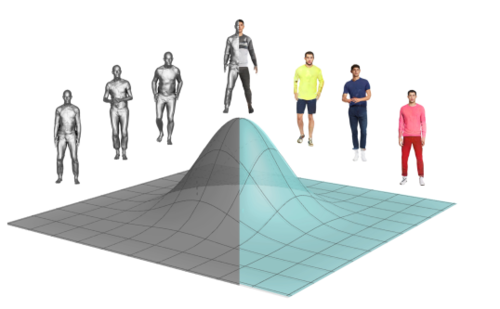

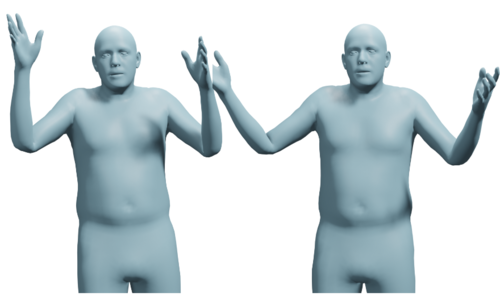

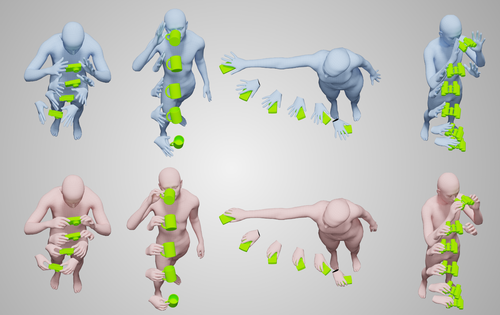

HUMOS: Human Motion Model Conditioned on Body Shape

Tripathi, S., Taheri, O., Lassner, C., Black, M. J., Holden, D., Stoll, C.

In European Conference on Computer Vision (ECCV 2024), LNCS, Springer Cham, October 2024 (inproceedings)

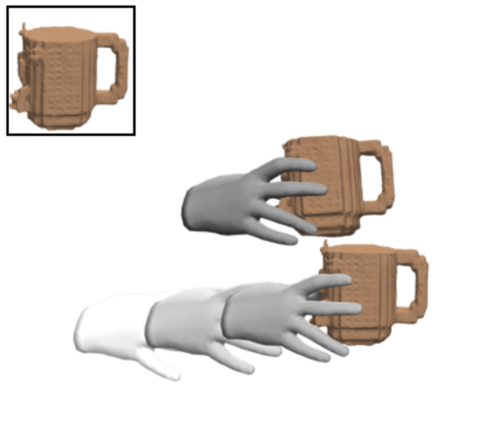

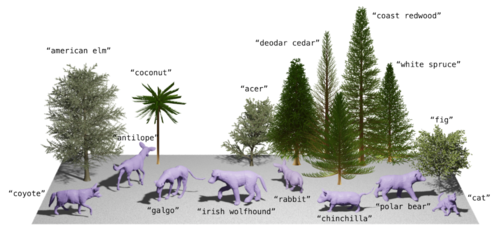

GraspXL: Generating Grasping Motions for Diverse Objects at Scale

Zhang, H., Christen, S., Fan, Z., Hilliges, O., Song, J.

In European Conference on Computer Vision (ECCV 2024), LNCS, Springer Cham, September 2024 (inproceedings) Accepted

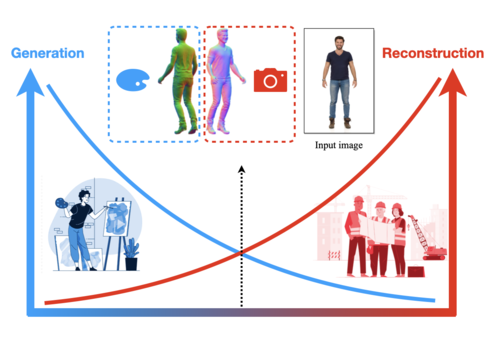

Leveraging Unpaired Data for the Creation of Controllable Digital Humans

Realistic Digital Human Characters: Challenges, Models and Algorithms

Benchmarks and Challenges in Pose Estimation for Egocentric Hand Interactions with Objects

Fan, Z., Ohkawa, T., Yang, L., Lin, N., Zhou, Z., Zhou, S., Liang, J., Gao, Z., Zhang, X., Zhang, X., Li, F., Zheng, L., Lu, F., Zeid, K. A., Leibe, B., On, J., Baek, S., Prakash, A., Gupta, S., He, K., Sato, Y., Hilliges, O., Chang, H. J., Yao, A.

In European Conference on Computer Vision (ECCV 2024), LNCS, Springer Cham, September 2024 (inproceedings) Accepted

AWOL: Analysis WithOut synthesis using Language

ContourCraft: Learning to Resolve Intersections in Neural Multi-Garment Simulations

Grigorev, A., Becherini, G., Black, M., Hilliges, O., Thomaszewski, B.

In ACM SIGGRAPH 2024 Conference Papers, pages: 1-10, SIGGRAPH ’24, Association for Computing Machinery, New York, NY, USA, July 2024 (inproceedings)

Modelling Dynamic 3D Human-Object Interactions: From Capture to Synthesis

Airship Formations for Animal Motion Capture and Behavior Analysis

(Best Paper)

Proceedings 2nd International Conference on Design and Engineering of Lighter-Than-Air systems (DELTAS2024), June 2024 (conference) Accepted

EMAGE: Towards Unified Holistic Co-Speech Gesture Generation via Expressive Masked Audio Gesture Modeling

Liu, H., Zhu, Z., Becherini, G., Peng, Y., Su, M., Zhou, Y., Zhe, X., Iwamoto, N., Zheng, B., Black, M. J.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), June 2024 (inproceedings)

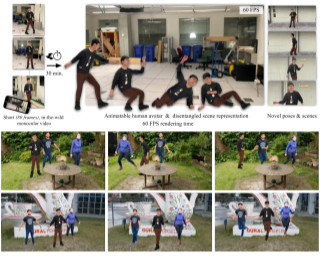

HUGS: Human Gaussian Splats

Kocabas, M., Chang, R., Gabriel, J., Tuzel, O., Ranjan, A.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), June 2024 (inproceedings)

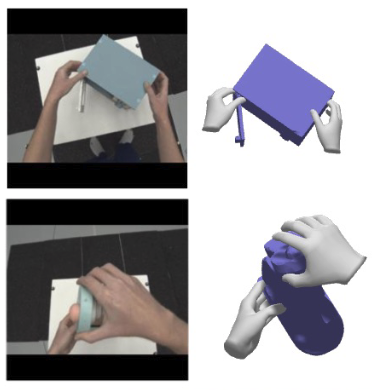

HOLD: Category-agnostic 3D Reconstruction of Interacting Hands and Objects from Video

(Highlight)

Fan, Z., Parelli, M., Kadoglou, M. E., Kocabas, M., Chen, X., Black, M. J., Hilliges, O.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), June 2024 (inproceedings)

SCULPT: Shape-Conditioned Unpaired Learning of Pose-dependent Clothed and Textured Human Meshes

Sanyal, S., Ghosh, P., Yang, J., Black, M. J., Thies, J., Bolkart, T.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), pages: 2362-2371, June 2024 (inproceedings)

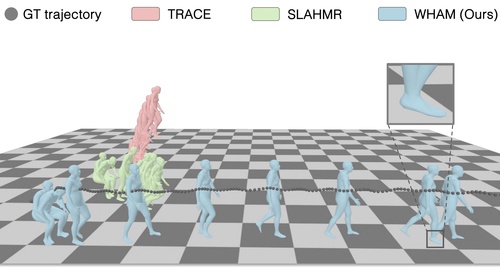

WHAM: Reconstructing World-grounded Humans with Accurate 3D Motion

Shin, S., Kim, J., Halilaj, E., Black, M. J.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), June 2024 (inproceedings)

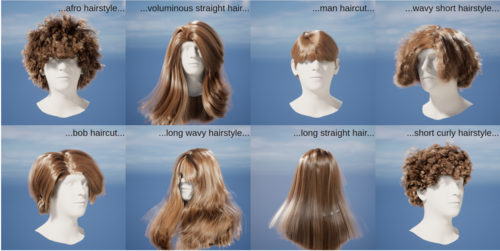

Text-Conditioned Generative Model of 3D Strand-based Human Hairstyles

Sklyarova, V., Zakharov, E., Hilliges, O., Black, M. J., Thies, J.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), June 2024 (inproceedings)

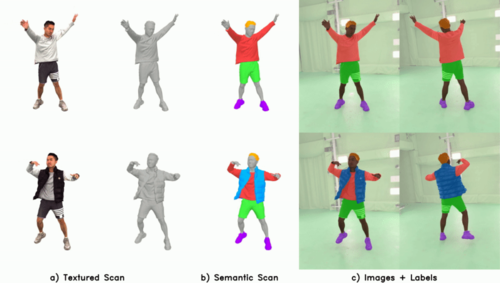

4D-DRESS: A 4D Dataset of Real-World Human Clothing With Semantic Annotations

Wang, W., Ho, H., Guo, C., Rong, B., Grigorev, A., Song, J., Zarate, J. J., Hilliges, O.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), June 2024 (inproceedings)

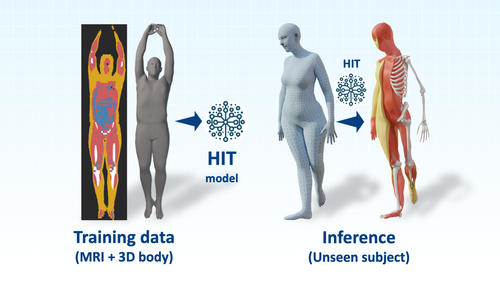

HIT: Estimating Internal Human Implicit Tissues from the Body Surface

Keller, M., Arora, V., Dakri, A., Chandhok, S., Machann, J., Fritsche, A., Black, M. J., Pujades, S.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), pages: 3480-3490, June 2024 (inproceedings)

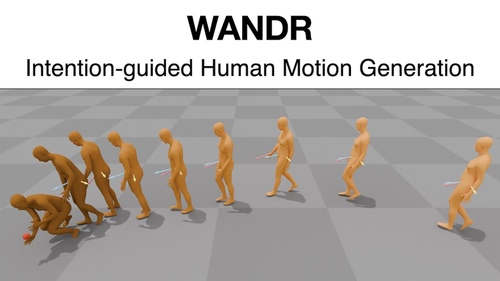

WANDR: Intention-guided Human Motion Generation

Diomataris, M., Athanasiou, N., Taheri, O., Wang, X., Hilliges, O., Black, M. J.

In 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages: 927-936, IEEE Computer Society, June 2024 (inproceedings)

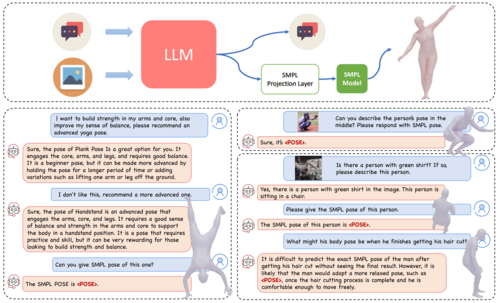

ChatPose: Chatting about 3D Human Pose

Feng, Y., Lin, J., Dwivedi, S. K., Sun, Y., Patel, P., Black, M. J.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), June 2024 (inproceedings)

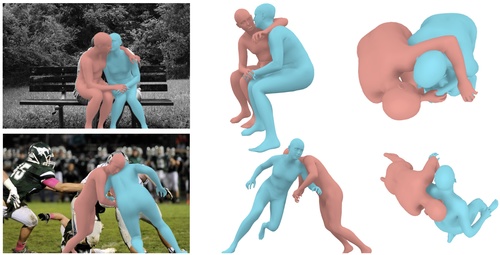

Generative Proxemics: A Prior for 3D Social Interaction from Images

Müller, L., Ye, V., Pavlakos, G., Black, M., Kanazawa, A.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), June 2024 (inproceedings)

VAREN: Very Accurate and Realistic Equine Network

Zuffi, S., Mellbin, Y., Li, C., Hoeschle, M., Kjellstrom, H., Polikovsky, S., Hernlund, E., Black, M. J.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), June 2024 (inproceedings)

TokenHMR: Advancing Human Mesh Recovery with a Tokenized Pose Representation

Dwivedi, S. K., Sun, Y., Patel, P., Feng, Y., Black, M. J.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), June 2024 (inproceedings)

MonoHair: High-Fidelity Hair Modeling from a Monocular Video

(Oral)

Wu, K., Yang, L., Kuang, Z., Feng, Y., Han, X., Shen, Y., Fu, H., Zhou, K., Zheng, Y.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), June 2024 (inproceedings)

AMUSE: Emotional Speech-driven 3D Body Animation via Disentangled Latent Diffusion

Chhatre, K., Daněček, R., Athanasiou, N., Becherini, G., Peters, C., Black, M. J., Bolkart, T.

In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages: 1942-1953, June 2024 (inproceedings)

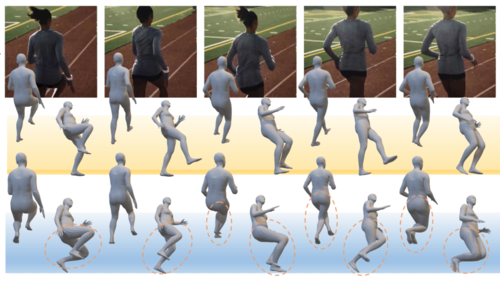

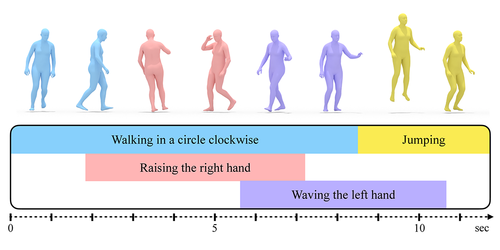

Multi-Track Timeline Control for Text-Driven 3D Human Motion Generation

Petrovich, M., Litany, O., Iqbal, U., Black, M. J., Varol, G., Peng, X. B., Rempe, D.

In CVPR Workshop on Human Motion Generation, Seattle, June 2024 (inproceedings)

A Unified Approach for Text- and Image-guided 4D Scene Generation

Zheng, Y., Li, X., Nagano, K., Liu, S., Hilliges, O., De Mello, S.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), June 2024 (inproceedings)

Neuropostors: Neural Geometry-aware 3D Crowd Character Impostors

Ostrek, M., Mitra, N. J., O’Sullivan, C.

In 2024 27th International Conference on Pattern Recognition (ICPR), Springer, June 2024 (inproceedings) Accepted

Real-time Monocular Full-body Capture in World Space via Sequential Proxy-to-Motion Learning

Zhang, H., Zhang, Y., Hu, L., Zhang, J., Yi, H., Zhang, S., Liu, Y.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), June 2024 (inproceedings)

SMIRK: 3D Facial Expressions through Analysis-by-Neural-Synthesis

Retsinas, G., Filntisis, P. P., Daněček, R., Abrevaya, V. F., Roussos, A., Bolkart, T., Maragos, P.

In IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), June 2024 (inproceedings)

Ghost on the Shell: An Expressive Representation of General 3D Shapes

Liu, Z., Feng, Y., Xiu, Y., Liu, W., Paull, L., Black, M. J., Schölkopf, B.

In Proceedings of the Twelfth International Conference on Learning Representations (ICLR), May 2024 (inproceedings)

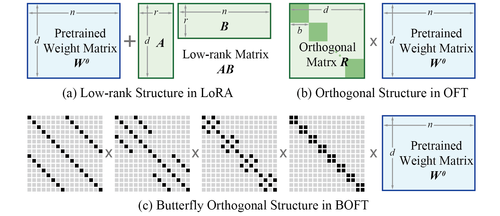

Parameter-Efficient Orthogonal Finetuning via Butterfly Factorization

Liu, W., Qiu, Z., Feng, Y., Xiu, Y., Xue, Y., Yu, L., Feng, H., Liu, Z., Heo, J., Peng, S., Wen, Y., Black, M. J., Weller, A., Schölkopf, B.

In Proceedings of the Twelfth International Conference on Learning Representations (ICLR), May 2024 (inproceedings)

TADA! Text to Animatable Digital Avatars

Liao, T., Yi, H., Xiu, Y., Tang, J., Huang, Y., Thies, J., Black, M. J.

In International Conference on 3D Vision (3DV 2024), March 2024 (inproceedings) Accepted

POCO: 3D Pose and Shape Estimation using Confidence

Dwivedi, S. K., Schmid, C., Yi, H., Black, M. J., Tzionas, D.

In International Conference on 3D Vision (3DV 2024), March 2024 (inproceedings)

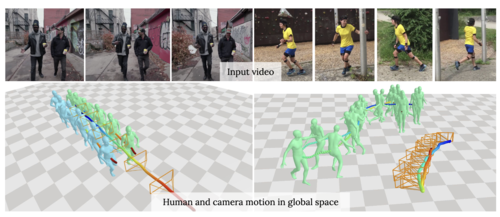

PACE: Human and Camera Motion Estimation from in-the-wild Videos

Kocabas, M., Yuan, Y., Molchanov, P., Guo, Y., Black, M. J., Hilliges, O., Kautz, J., Iqbal, U.

In International Conference on 3D Vision (3DV 2024), March 2024 (inproceedings)

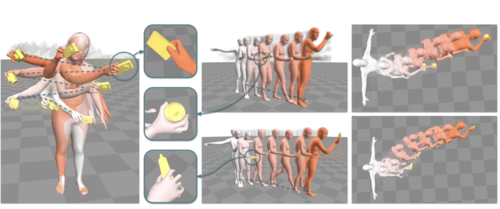

Physically plausible full-body hand-object interaction synthesis

Braun, J., Christen, S., Kocabas, M., Aksan, E., Hilliges, O.

In International Conference on 3D Vision (3DV 2024), March 2024 (inproceedings)

TECA: Text-Guided Generation and Editing of Compositional 3D Avatars

Zhang, H., Feng, Y., Kulits, P., Wen, Y., Thies, J., Black, M. J.

In International Conference on 3D Vision (3DV 2024), March 2024 (inproceedings) To be published

ArtiGrasp: Physically Plausible Synthesis of Bi-Manual Dexterous Grasping and Articulation

Zhang, H., Christen, S., Fan, Z., Zheng, L., Hwangbo, J., Song, J., Hilliges, O.

In International Conference on 3D Vision (3DV 2024), March 2024 (inproceedings) Accepted

TeCH: Text-guided Reconstruction of Lifelike Clothed Humans

Huang, Y., Yi, H., Xiu, Y., Liao, T., Tang, J., Cai, D., Thies, J.

In International Conference on 3D Vision (3DV 2024), March 2024 (inproceedings) Accepted

GRIP: Generating Interaction Poses Using Spatial Cues and Latent Consistency

Taheri, O., Zhou, Y., Tzionas, D., Zhou, Y., Ceylan, D., Pirk, S., Black, M. J.

In International Conference on 3D Vision (3DV 2024), March 2024 (inproceedings)

Adversarial Likelihood Estimation With One-Way Flows

Ben-Dov, O., Gupta, P. S., Abrevaya, V., Black, M. J., Ghosh, P.

In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), pages: 3779-3788, January 2024 (inproceedings)

Self- and Interpersonal Contact in 3D Human Mesh Reconstruction