AMUSE: Emotional Speech-driven 3D Body Animation via Disentangled Latent Diffusion

2024

Conference Paper

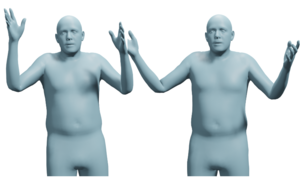

ps

Existing methods for synthesizing 3D human gestures from speech have shown promising results, but they do not explicitly model the impact of emotions on the generated gestures. Instead, these methods directly output animations from speech without control over the expressed emotion. To address this limitation, we present AMUSE, an emotional speech-driven body animation model based on latent diffusion. Our observation is that content (i.e., gestures related to speech rhythm and word utterances), emotion, and personal style are separable. To account for this, AMUSE maps the driving audio to three disentangled latent vectors: one for content, one for emotion, and one for personal style. A latent diffusion model, trained to generate gesture motion sequences, is then conditioned on these latent vectors. Once trained, AMUSE synthesizes 3D human gestures directly from speech with control over the expressed emotions and style by combining the content from the driving speech with the emotion and style of another speech sequence. Randomly sampling the noise of the diffusion model further generates variations of the gesture with the same emotional expressivity. Qualitative, quantitative, and perceptual evaluations demonstrate that AMUSE outputs realistic gesture sequences. Compared to the state of the art, the generated gestures are better synchronized with the speech content and better represent the emotion expressed by the input speech.
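The data flow described in the abstract can be illustrated with a toy, dependency-free sketch. Speech is mapped to three codes (content, emotion, style). Content is kept from the driving speech, while emotion and style are taken from a reference speech. A diffusion sampler conditioned on the combined codes then produces an output. Different noise seeds give different variations around the same conditioned target.

Every name below (`encode_speech`, `transfer`, `toy_denoiser`, `ddim_sample`) is hypothetical:

- The encoder is a slicing stand-in for learned networks.
- The denoiser is a hand-written function rather than a trained model.
- The sampler is a deterministic DDIM-style update chosen for brevity. The abstract does not state which sampler, schedule or conditioning mechanism AMUSE uses.

```python
import math
import random
from dataclasses import dataclass
from typing import List

Vector = List[float]


@dataclass
class SpeechLatents:
    """The three disentangled codes named in the abstract."""
    content: Vector
    emotion: Vector
    style: Vector


def encode_speech(features: Vector, dim: int) -> SpeechLatents:
    # Hypothetical toy encoder: slices one feature vector into three codes.
    # AMUSE learns its audio encoders; this stand-in only shows the data flow.
    if len(features) < 3 * dim:
        raise ValueError("need at least 3 * dim features")
    return SpeechLatents(
        content=features[:dim],
        emotion=features[dim:2 * dim],
        style=features[2 * dim:3 * dim],
    )


def transfer(driving: SpeechLatents, reference: SpeechLatents) -> SpeechLatents:
    # Content comes from the driving speech; emotion and style come from
    # another (reference) speech sequence.
    return SpeechLatents(driving.content, reference.emotion, reference.style)


def toy_denoiser(x_t: Vector, cond: SpeechLatents) -> Vector:
    # Hypothetical x0-predictor standing in for a trained network. The
    # condition fixes the "clean" target (a toy elementwise sum); the small
    # tanh(x_t) term lets different starting noise give different outputs.
    target = [c + e + s for c, e, s in zip(cond.content, cond.emotion, cond.style)]
    return [g + 0.1 * math.tanh(x) for g, x in zip(target, x_t)]


def ddim_sample(cond: SpeechLatents, steps: int = 10, seed: int = 0) -> Vector:
    # Deterministic DDIM-style sampler (eta = 0): all randomness is in the
    # initial noise, so the seed selects one variation for a fixed condition.
    rng = random.Random(seed)

    def alpha_bar(t: int) -> float:
        # Toy noise schedule (assumption): alpha_bar(0) = 1 means fully denoised.
        return 1.0 - t / (steps + 1)

    x = [rng.gauss(0.0, 1.0) for _ in cond.content]
    for t in range(steps, 0, -1):
        ab_t, ab_prev = alpha_bar(t), alpha_bar(t - 1)
        x0 = toy_denoiser(x, cond)
        # Noise implied by the current sample and the predicted clean sample.
        eps = [(xi - math.sqrt(ab_t) * x0i) / math.sqrt(1.0 - ab_t)
               for xi, x0i in zip(x, x0)]
        # Step to the less noisy level t - 1.
        x = [math.sqrt(ab_prev) * x0i + math.sqrt(1.0 - ab_prev) * ei
             for x0i, ei in zip(x0, eps)]
    return x
```

For example, suppose two toy feature vectors are encoded and combined with `transfer(a, b)`, then sampled with two different seeds. Both outputs land near the same conditioned target but differ from each other. This loosely mirrors the abstract's point that resampling the diffusion noise yields gesture variations with the same emotional expressivity. In the real model, the output would be a gesture motion sequence rather than a short toy vector.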

| Author(s): | Kiran Chhatre and Radek Daněček and Nikos Athanasiou and Giorgio Becherini and Christopher Peters and Michael J. Black and Timo Bolkart |

| Book Title: | Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) |

| Pages: | 1942-1953 |

| Year: | 2024 |

| Month: | June |

| Department(s): | Perceiving Systems |

| Bibtex Type: | Conference Paper (inproceedings) |

| Paper Type: | Conference |

| Event Place: | Seattle, USA |

| Eprint: | 2312.04466 |

| State: | Published |

| URL: | https://amuse.is.tue.mpg.de |

| Additional (custom) Fields: | |

| archiveprefix: | arXiv |

| primaryclass: | cs.CV |

| Links: | Project, Paper, Code |

BibTeX:

@inproceedings{Chhatre_2024_CVPR,
  title = {{AMUSE}: Emotional Speech-driven {3D} Body Animation via Disentangled Latent Diffusion},
  author = {Chhatre, Kiran and Daněček, Radek and Athanasiou, Nikos and Becherini, Giorgio and Peters, Christopher and Black, Michael J. and Bolkart, Timo},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  pages = {1942-1953},
  month = jun,
  year = {2024},
  doi = {},
  eprint = {2312.04466},
  url = {https://amuse.is.tue.mpg.de},
  month_numeric = {6}
}