Early Stopping Without a Validation Set

2017

Article

ps

pn

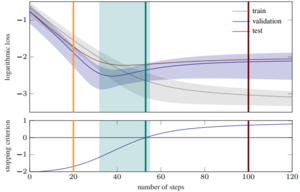

Early stopping is a widely used technique to prevent poor generalization performance when training an over-expressive model by means of gradient-based optimization. To find a good point to halt the optimizer, a common practice is to split the dataset into a training and a smaller validation set to obtain an ongoing estimate of the generalization performance. In this paper we propose a novel early stopping criterion which is based on fast-to-compute, local statistics of the computed gradients and entirely removes the need for a held-out validation set. Our experiments show that this is a viable approach in the setting of least-squares and logistic regression as well as neural networks.
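The abstract describes the criterion only at a high level. To illustrate the idea, one natural gradient statistic of this kind compares the squared mini-batch mean gradient with its estimated per-coordinate variance. Suppose the true gradient were zero. Then each term B·ḡ_k²/σ̂_k² would have an expectation of roughly 1, so the quantity 1 − (B/D)·Σ_k ḡ_k²/σ̂_k² turning positive suggests that the observed gradient cannot be distinguished from mini-batch noise. Here B is the batch size and D is the number of parameters. The sketch below is a minimal illustration under these assumptions. It takes per-example gradients as plain Python lists. The names `eb_stop_signal` and `should_stop` and the `eps` parameter are hypothetical. The exact rule used in the paper, including any smoothing across iterations, may differ.

```python
from typing import Sequence


def eb_stop_signal(per_example_grads: Sequence[Sequence[float]], eps: float = 1e-12) -> float:
    """Hypothetical sketch: 1 - (B/D) * sum_k mean_k**2 / var_k.

    per_example_grads[b][k] is the gradient of example b w.r.t. parameter k.
    A positive return value means the mini-batch gradient is small relative
    to its own sampling noise, i.e. it looks like pure noise.
    """
    B = len(per_example_grads)
    if B < 2:
        raise ValueError("need at least two examples to estimate a variance")
    D = len(per_example_grads[0])
    total = 0.0
    for k in range(D):
        column = [g[k] for g in per_example_grads]
        mean = sum(column) / B
        # Unbiased sample variance of the per-example gradients; eps guards against division by zero.
        var = sum((c - mean) ** 2 for c in column) / (B - 1) + eps
        total += mean * mean / var
    return 1.0 - (B / D) * total


def should_stop(per_example_grads: Sequence[Sequence[float]]) -> bool:
    # Assumed decision rule: stop once the signal becomes positive.
    return eb_stop_signal(per_example_grads) > 0.0


# Noise-dominated batch: the mean gradient is zero, so the signal is 1.0 and we stop.
print(should_stop([[1.0, -1.0], [-1.0, 1.0]]))
# Consistent, non-zero gradients: the signal is strongly negative, so training continues.
print(should_stop([[1.0, 2.0], [1.2, 2.2], [0.8, 1.8]]))
```

In practice, this check would run on the mini-batch that the optimizer already computes at each step, so it needs no held-out data. Because a single mini-batch is noisy, a real implementation would probably require the signal to stay positive over several steps before halting.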

| Field | Value |
| --- | --- |
| Author(s) | Maren Mahsereci, Lukas Balles, Christoph Lassner and Philipp Hennig |
| Journal | arXiv preprint arXiv:1703.09580 |
| Year | 2017 |
| Department(s) | Perceiving Systems, Probabilistic Numerics |
| Research Project(s) | Efficient and Scalable Inference |
| BibTeX Type | Article (article) |
| Paper Type | Journal |
| URL | https://arxiv.org/abs/1703.09580 |

BibTeX:

@article{Mahsereci:EarlyStopping:2017,
  title = {Early Stopping Without a Validation Set},
  author = {Mahsereci, Maren and Balles, Lukas and Lassner, Christoph and Hennig, Philipp},
  journal = {arXiv preprint arXiv:1703.09580},
  year = {2017},
  doi = {},
  url = {https://arxiv.org/abs/1703.09580}
}